Enabling more people to teleoperate ocean robots

Our Focus Group tackled the design challenges of enabling remote robot operations on land and at sea, using a multidisciplinary approach that combined robot control and human-robot interaction. We developed ways to reduce the cost of robot teleoperation interfaces and improve access to them, enabling very remote operators to perceive visual, auditory, and force-feedback information from robotic manipulators.

Research

Focus Group: Human-in-the-loop Ocean Robotics

Prof. Leila Takayama (University of California, Santa Cruz, now Hoku Labs and Robust.AI), Alumna Hans Fischer Fellow (funded by Siemens AG) | Srini Lakshminarayanan (TUM), Doctoral Candidate | Host: Prof. Sami Haddadin (TUM, now Mohamed bin Zayed University of Artificial Intelligence)

(Image: University of California, Santa Cruz)

The problem:

Our understanding of the oceans has long been limited by our ability to reach ocean depths, which are extremely dangerous for humans. Only a few oceanographic institutes can afford to deploy research vessels equipped with ocean robot technologies. Even for the most talented pilots, performing deep-sea navigation and manipulation tasks with these remotely operated vehicles (ROVs) is extremely demanding cognitively. Ocean science missions are also highly diverse, e.g., producing 3-D maps of the seafloor, gathering geological samples, recording video of ocean creatures rarely or never before seen, and delicately sampling living creatures from the midwater.

Research goals:

These challenges inspired our Focus Group’s research program. How might we make it easier for ROV pilots to perform their remote manipulation tasks, expanding the pool of future robot operators? Even better, what if we could also make this possible for ocean robots operated without tethers, enabling people to fly ROVs more freely and possibly even from shore?

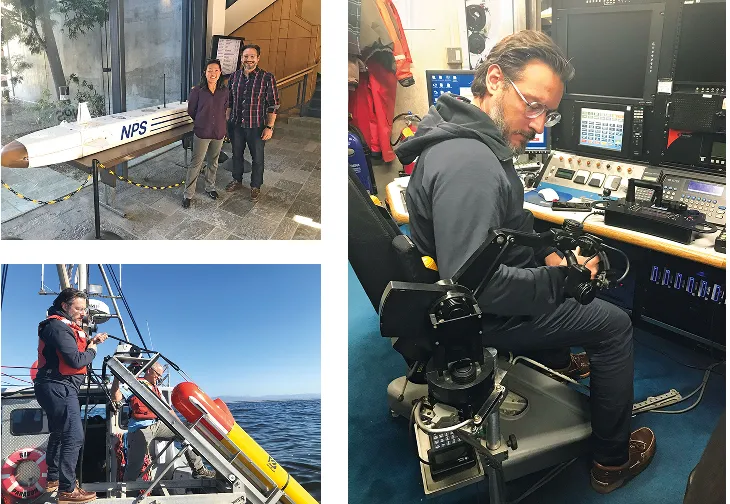

Figure 1

Our approach:

Using a multidisciplinary approach, we drew on our expertise in robot control, cognitive psychology, and human-robot interaction to develop prototypes for our ocean science and engineering collaborators at the Monterey Bay Aquarium Research Institute (MBARI). We observed and interviewed ROV pilots and users of MBARI’s telepresence systems, designed and built prototype robot teleoperation interfaces, and conducted empirical studies of our prototype and of other robot teleoperation interfaces to evaluate their performance and identify fruitful directions for future research.

1. Understanding ocean robot piloting

Sami Haddadin visited MBARI and the US Naval Postgraduate School with Leila Takayama to get a hands-on feel for how they operate their ocean robots (Fig. 1). Subsequently, our Focus Group observed ROV piloting sessions and interviewed users of MBARI’s research vessel telepresence systems (2019–2022), including ocean scientists, ship’s crew, and ROV pilots [1]. We learned that ROV piloting is extremely challenging, especially with the interfaces currently found in ROV control rooms. To fly ROVs effectively, operators need sufficient situation awareness to make decisions such as how hard to push the sampler against the seafloor, how far forward to reach to capture a jellyfish, or how to manage the tension on the tether that connects the ROV to the ship.

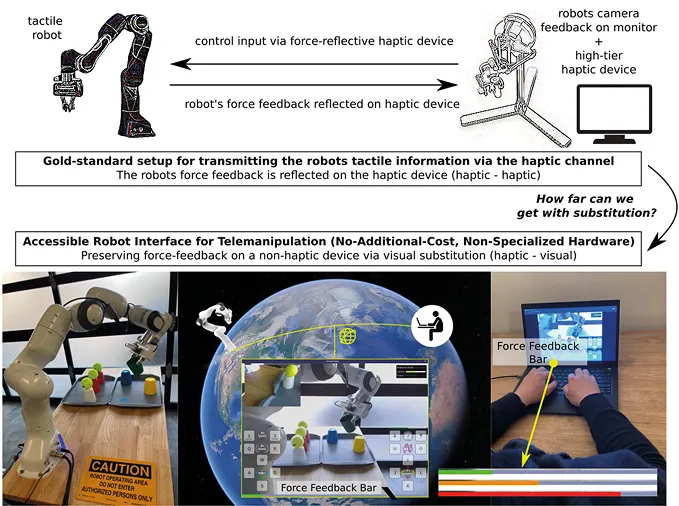

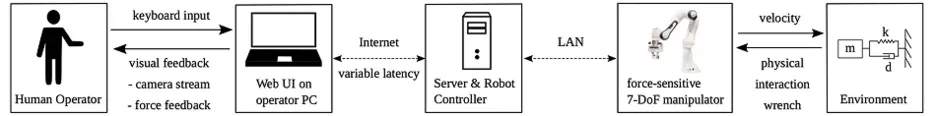

2. Exploring force feedback for ocean robot operators

While the gold standard for robot teleoperation uses high-precision force-feedback input devices, we took a low-cost approach based on widely available personal computer hardware and software, to broaden access to these types of jobs [2] (Figs. 2 and 3). Because ordinary home computing devices cannot render force feedback, we experimented with communicating force information through other modalities (e.g., visual and auditory). For our user study, we chose a visual bar at the bottom of the video display in the web browser, which became longer and redder as the robot arm experienced higher forces. Participants in Munich operated a robot arm on the TUM campus (slightly remote) and a robot arm in Santa Cruz, California (very remote), flipping plastic cups on a table and placing balls into them. As expected, participants performed better (i.e., placed more balls into cups) when operating the nearby robot than when operating the very remote one. When operating the very remote robot, participants made fewer errors when force feedback was shown in the visual bar than when the bar was absent. From this study, we learned that visually rendered force-feedback information can improve very remote teleoperation of robot manipulators. This inspired us to explore other forms of sensory remapping (e.g., force information communicated via sounds, and depth information communicated via stereoscopic images [3]).
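The visual bar described above amounts to a mapping from a measured force magnitude to a bar length and a color. The Python sketch below illustrates one such mapping; it is not the A-RIFT implementation [2], and the saturation force (`max_force_n`), the bar length in pixels, the linear scaling, and the green-to-red color blend are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ForceBar:
    """Hypothetical rendering parameters for an on-screen force bar."""
    length_px: float             # bar length in pixels
    rgb: tuple[int, int, int]    # fill color


def force_to_bar(force_n: float, max_force_n: float = 30.0,
                 max_length_px: float = 400.0) -> ForceBar:
    """Map a force reading to a bar that grows longer and redder.

    Assumptions (illustrative only): linear scaling, saturation at
    max_force_n, and a linear blend from green (low) to red (high).
    """
    if max_force_n <= 0:
        raise ValueError("max_force_n must be positive")
    # Normalize the force magnitude to [0, 1]; larger forces saturate.
    level = min(abs(force_n) / max_force_n, 1.0)
    length = level * max_length_px
    # Blend green (0, 200, 0) at zero force toward red (255, 0, 0) at saturation.
    red = round(255 * level)
    green = round(200 * (1.0 - level))
    return ForceBar(length_px=length, rgb=(red, green, 0))


if __name__ == "__main__":
    for reading in (0.0, 15.0, 45.0):
        print(reading, force_to_bar(reading))
```

A linear, saturating map keeps the bar easy to read at a glance; a logarithmic or stepped scale would be an equally plausible choice if small contact forces mattered more than large ones.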

Figure 2

Figure 3

3. Enabling ocean robots to estimate forces

While our initial work was conducted on land, our follow-up studies were conducted underwater. Detecting the forces acting on ocean robots is especially challenging because there are many sources of strong forces to account for, including ocean currents and the robot’s tether. Using inertial measurement units (IMUs) on a relatively low-cost BlueROV in a TUM laboratory test tank, we investigated whether current machine learning techniques could estimate the forces acting on these ocean robots [4]. Our model-based approach outperformed other methods (e.g., support vector regression and bidirectional encoder representations from transformers) at estimating the forces experienced by the ROV. This groundwork could enable more autonomous behaviors such as collision avoidance, even when the connection between remote operators and the ROV has high latency. It could also serve as an additional sensory information channel for robot pilots.
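One common way to estimate external forces from onboard sensing is a model-based residual: whatever part of the measured acceleration the vehicle’s own thrust and hydrodynamic damping cannot explain is attributed to an external force, F_ext = (m + m_a)·a − τ + d_lin·v + d_quad·|v|·v. The Python sketch below shows this idea for a single (surge) axis. It is a generic illustration, not the estimator from [4]; the `SurgeModel` parameter values, the forward-Euler velocity integration, the smoothing weight `alpha`, and the contact threshold are all hypothetical. On a real vehicle, velocity integrated from IMU data drifts and would need to be fused with other sensors.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SurgeModel:
    """Hypothetical single-axis (surge) vehicle model; values are placeholders."""
    mass_kg: float = 10.0       # rigid-body mass
    added_mass_kg: float = 5.0  # hydrodynamic added mass
    d_lin: float = 4.0          # linear damping coefficient, N/(m/s)
    d_quad: float = 20.0        # quadratic damping coefficient, N/(m/s)^2

    def damping(self, v: float) -> float:
        """Hydrodynamic damping force opposing motion at velocity v."""
        return self.d_lin * v + self.d_quad * abs(v) * v


def simulate_surge(model: SurgeModel, thrust: list[float],
                   f_ext: float, dt: float) -> list[float]:
    """Generate synthetic accelerations under a constant external force."""
    inertia = model.mass_kg + model.added_mass_kg
    v, accel = 0.0, []
    for tau in thrust:
        a = (tau - model.damping(v) + f_ext) / inertia
        accel.append(a)
        v += a * dt  # forward-Euler velocity update
    return accel


def estimate_external_force(model: SurgeModel, accel: list[float],
                            thrust: list[float], dt: float,
                            alpha: float = 0.2) -> list[float]:
    """Residual estimate: F_ext = (m + m_a) * a - thrust + damping(v).

    Velocity is obtained by integrating the measured acceleration, and an
    exponential moving average (weight alpha) smooths the raw residual.
    """
    if len(accel) != len(thrust):
        raise ValueError("accel and thrust must have the same length")
    inertia = model.mass_kg + model.added_mass_kg
    v, smoothed, estimates = 0.0, 0.0, []
    for a, tau in zip(accel, thrust):
        raw = inertia * a - tau + model.damping(v)
        smoothed = alpha * raw + (1.0 - alpha) * smoothed
        estimates.append(smoothed)
        v += a * dt  # forward-Euler update; drifts on real IMU data
    return estimates


def first_contact(estimates: list[float], threshold_n: float) -> Optional[int]:
    """Index of the first sample whose estimated force magnitude exceeds the threshold."""
    for i, f in enumerate(estimates):
        if abs(f) > threshold_n:
            return i
    return None


if __name__ == "__main__":
    model = SurgeModel()
    thrust = [20.0] * 200
    accel = simulate_surge(model, thrust, f_ext=-8.0, dt=0.05)
    est = estimate_external_force(model, accel, thrust, dt=0.05)
    print(f"final estimate: {est[-1]:.3f} N, "
          f"first contact at sample {first_contact(est, 5.0)}")
```

A threshold on the smoothed estimate, as in `first_contact`, is the simplest way such an estimate could trigger an on-board reaction without waiting for a round trip to a distant operator; the smoothing trades a few samples of delay for robustness to sensor noise.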

Future work:

Thanks to the support of the TUM-IAS Fellowship, our Focus Group has built collaborative relationships across continents and institutions, enabling us to expand the capabilities of ocean exploration teams. Our initial studies have shown that we can improve situation awareness for robot operators by leveraging different perceptual channels. Furthermore, we may be able to estimate the forces acting on these ocean robots, both to convey that information to robot operators and to enable more autonomous on-board capabilities. Wherever possible, we used low-cost, widely available sensors and input devices (e.g., personal computers, gaming VR headsets, IMUs) so that our results can be more readily accessed, used, and built upon by the broader research community.

In close collaboration with Emma G. Cunningham (UW Madison), Michael J. Sack (Cornell University), and Benjamin P. Hughes, Kevin Weatherwax, Alison Crosby (all University of California, Santa Cruz) and Peter So, Alexander Moortgat-Pick, Anna Adamczyk, Dr. Daniel-Andre Dücker (all TUM), and Prof. Andriy Sarabakha (Aarhus University).

[1] Crosby, A. et al. (2024).

[2] Moortgat-Pick, A. et al. (2022).

[3] Elor, A. et al. (2021).

[4] Lakshminarayanan, S. et al. (2024).

Selected publications

- Lakshminarayanan, S., Duecker, D., Sarabakha, A., Ganguly, A., Takayama, L. & Haddadin, S. Estimation of external force acting on underwater robots. 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), 3125-3131 (2024).

- Moortgat-Pick, A., So, P., Sack, M.J., Cunningham, E.G., Hughes, B.P., Adamczyk, A., Sarabakha, A., Takayama, L. & Haddadin, S. A-RIFT: visual substitution of force feedback for a zero-cost interface in telemanipulation. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 3926-3933 (2022).

- Crosby, A., Takayama, L., Martin, E.J., Matsumoto, G.I., Kakani, K. & Caress, D.W. Beyond bunkspace: telepresence for deep sea exploration. 2024 IEEE Conference on Telepresence, 16-23 (2024).

- Elor, A., et al. Catching jellies in immersive virtual reality: a comparative teleoperation study of ROVs in underwater capture tasks. Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology, 1-10 (2021).