Data-driven algorithms for signal and information processing

The past decade has seen remarkable advances in information and signal processing technologies through data-driven solutions, even for problems that were traditionally solved without any data. The Professorship for Machine Learning develops such data-driven algorithms and corresponding mathematical performance guarantees.

Focus Group: Machine Learning

Prof. Reinhard Heckel (TUM), Alumnus Rudolf Mößbauer Tenure Track Assistant Professor

(Image: Andreas Heddergott, TUM)

Deep learning for imaging and signal processing

An important problem in signal processing and imaging is to reconstruct a signal or image from few and noisy measurements. Until recently, the best methods for reconstructing images from measurements were designed by experts on the basis of the physics of the measurement process alone, without using data.

Today, deep neural networks trained on example images perform best for many applications. For example, the newest magnetic resonance imaging scanners and the iPhone use deep networks to reconstruct high-quality images.

However, deep networks often rely on large amounts of clean data, lack theory, and can be sensitive to perturbations. To address these challenges, we develop robust algorithms and theory for deep learning-based imaging and signal reconstruction, as well as for classical signal reconstruction.

Untrained neural networks for imaging:

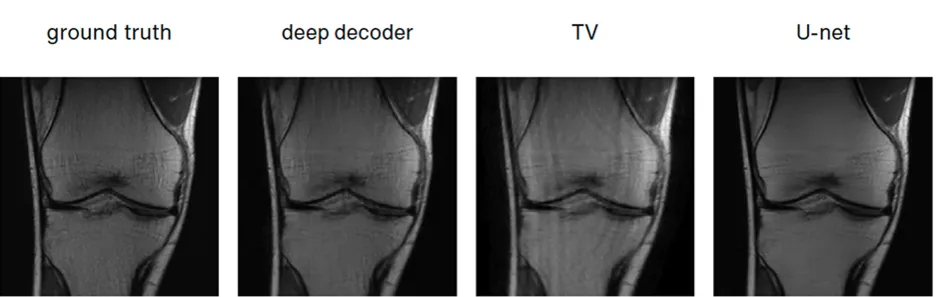

We developed the deep decoder, a neural network that reconstructs images from noisy measurements with excellent performance and without data beyond a single measurement.

For accelerated magnetic resonance imaging (MRI), the deep decoder significantly improves on classical sparsity-based methods, and its performance even approaches that of neural networks trained on large datasets (see Fig. 1).

The deep decoder and, more broadly, self-supervised networks, are applicable to a variety of image reconstruction problems. For example, they enable high-quality phase microscopy.

Moreover, unlike competing neural network-based methods, the deep decoder comes with rigorous performance guarantees: We developed mathematical denoising and signal reconstruction guarantees.
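As a rough sketch of how reconstruction with an untrained network is commonly formulated, the measurement model and the fitting problem can be written as follows. The notation is introduced here for illustration only and is an assumption: $y$ is the measurement, $A$ the known measurement operator (for accelerated MRI, an undersampled Fourier transform), $z$ the noise, and $G(C)$ the output of the network with weights $C$ and a fixed random input.

$$
y = A x + z, \qquad
\hat{C} \in \arg\min_{C} \; \lVert A\, G(C) - y \rVert_2^2, \qquad
\hat{x} = G(\hat{C}).
$$

The only data entering the optimization is the single measurement $y$. The network architecture itself acts as the image prior, which is why no training set is needed.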

Figure 1

Foundations for neural networks trained end-to-end for signal reconstruction:

In our current work, we focus on neural networks trained on example images, as they give state-of-the-art image quality and are very fast.

We are particularly focused on developing foundations and theory. For example, we derived denoising rates for neural network-based denoising and quantified the benefits of scaling (increasing) training data and of using large transformer-based models.

Robustness:

We develop methods that are robust to perturbations and distribution shifts.

We demonstrated that distribution shifts, such as training on data from one hospital and testing on data from another hospital, lead to a significant degradation of performance in practice.

Motivated by this finding, we developed a new fine-tuning method that is, for the first time, robust to such distribution shifts. We also proposed data-centric approaches for improving robustness to distribution shifts and derived theoretical results for worst-case robustness.

Foundations and methods for machine learning

We developed methods for and foundations of machine learning, in particular for adaptive machine learning, statistical learning, theory for neural networks, and robust machine learning.

Adaptive machine learning:

An important challenge in machine learning is to learn from data adaptively and continuously.

We developed methods and theory for active learning. In addition, by developing theory for continual learning, we contributed to learning from data continuously without forgetting previously learned tasks.

Theory for deep learning:

Neural networks perform very well in practice, but many aspects, such as common training practices and architectural choices, are not well understood. We contributed to the theoretical understanding of neural networks. For example, we have shown why early stopping of the training of overparameterized neural networks can be very effective.
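To make the idea of stopping training early concrete, the following minimal sketch runs gradient descent on an overparameterized linear model (more parameters than training examples) with noisy labels and keeps the iterate with the lowest validation error. The linear model is a stand-in chosen for brevity, not the setting analyzed in our work, and all names, sizes, and hyperparameters (`train_with_early_stopping`, `lr`, `steps`, the data dimensions) are hypothetical.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mse(w, X, y):
    """Mean squared error of the linear model w on the data (X, y)."""
    return sum((dot(w, x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def make_data(n, w_true, noise_std, rng):
    """Draw n Gaussian feature vectors with noisy linear labels."""
    X = [[rng.gauss(0, 1) for _ in w_true] for _ in range(n)]
    y = [dot(w_true, x) + rng.gauss(0, noise_std) for x in X]
    return X, y


def train_with_early_stopping(X_tr, y_tr, X_val, y_val, steps=300, lr=0.01):
    """Gradient descent from zero; remember the best iterate on validation data."""
    d = len(X_tr[0])
    w = [0.0] * d
    best_w, best_val, best_step = list(w), mse(w, X_val, y_val), 0
    val = best_val
    for step in range(1, steps + 1):
        residuals = [dot(w, x) - t for x, t in zip(X_tr, y_tr)]
        # Gradient of the mean squared training error with respect to w.
        grad = [
            2 * sum(r * x[j] for r, x in zip(residuals, X_tr)) / len(y_tr)
            for j in range(d)
        ]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
        val = mse(w, X_val, y_val)
        if val < best_val:
            best_w, best_val, best_step = list(w), val, step
    return best_w, best_val, best_step, val


rng = random.Random(0)
d = 40  # number of parameters, larger than the 20 training examples
w_true = [1.0 if j < 3 else 0.0 for j in range(d)]
X_tr, y_tr = make_data(20, w_true, 1.0, rng)
X_val, y_val = make_data(200, w_true, 1.0, rng)

best_w, best_val, best_step, final_val = train_with_early_stopping(
    X_tr, y_tr, X_val, y_val
)
print(f"best step: {best_step}, best val MSE: {best_val:.3f}, "
      f"final val MSE: {final_val:.3f}")
```

Comparing the validation error at the selected step with the error after the last step shows how much, if anything, is gained by stopping early for a given noise level and step size.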

Robustness and uncertainty quantification:

Neural networks often perform significantly worse under distribution shifts, i.e., slight differences between training and test data. We developed methods to improve robustness under distribution shift and are working on better understanding distribution shifts. For example, we have recently shown that for a large class of distribution shifts and models, there is a monotone relation between in-distribution and out-of-distribution performance.

DNA data storage

Due to its longevity and enormous information density, DNA is an attractive storage medium. However, practical constraints on reading and writing DNA require the data to be stored on several short DNA fragments that cannot be ordered spatially.

We developed coding and error-correction schemes for this unique setup. With our collaborators, we showed in accelerated aging experiments that digital information can be stored on DNA and perfectly retrieved after thousands of years.
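The constraint that data must be spread over many short, unordered fragments can be illustrated with a toy example: each fragment carries an index in addition to its payload, so that the original order can be restored after reading. This is a simplified sketch and not our actual coding scheme. It contains no error correction, it ignores biochemical constraints on the sequences, and the two-bits-per-nucleotide mapping, the 2-byte index, and all function names are assumptions made for illustration.

```python
import random

BASES = "ACGT"  # assumed mapping: 00 -> A, 01 -> C, 10 -> G, 11 -> T


def bytes_to_dna(data):
    """Map each byte to four nucleotides, most significant bits first."""
    return "".join(BASES[(b >> shift) & 3] for b in data for shift in (6, 4, 2, 0))


def dna_to_bytes(seq):
    """Inverse of bytes_to_dna; expects a length that is a multiple of 4."""
    out = bytearray()
    for i in range(0, len(seq), 4):
        value = 0
        for ch in seq[i:i + 4]:
            value = (value << 2) | BASES.index(ch)
        out.append(value)
    return bytes(out)


def encode(data, payload_bytes=8):
    """Split data into short fragments, each prefixed with a 2-byte index."""
    fragments = []
    for idx, start in enumerate(range(0, len(data), payload_bytes)):
        chunk = data[start:start + payload_bytes]
        fragments.append(bytes_to_dna(idx.to_bytes(2, "big") + chunk))
    return fragments


def decode(fragments):
    """Recover the data from fragments that arrive in arbitrary order."""
    records = [dna_to_bytes(f) for f in fragments]
    records.sort(key=lambda rec: int.from_bytes(rec[:2], "big"))
    return b"".join(rec[2:] for rec in records)


message = b"DNA is an attractive storage medium."
fragments = encode(message)
random.Random(1).shuffle(fragments)  # reading returns fragments unordered
recovered = decode(fragments)
print(recovered == message)
```

The index costs a fixed number of nucleotides per fragment, which matters when fragments are short. A practical system additionally has to cope with fragments that are lost or read with errors.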

Subsequently, we built information theoretic foundations for future DNA storage systems by characterizing the number of bits that can be stored per amount of DNA, and we are building empirical foundations by characterizing the error probabilities in practice.

We also used our system for the first commercial application of DNA storage, namely storing Massive Attack's music on DNA mixed into spray paint, and subsequently to store a TV series commercially for Netflix (see Fig. 2).

Figure 2

Selected publications

- R. Heckel, Deep Learning for computational imaging, Oxford University Press, 2025.

- T. Klug, K. Wang, S. Ruschke, and R. Heckel, MotionTTT: 2D Test-time-training motion estimation for 3D motion corrected MRI, NeurIPS 2024.

- S. Wiedemann and R. Heckel, A deep learning method for simultaneous denoising and missing wedge reconstruction in cryogenic electron tomography, Nature Communications, 2024.

- M. Zalbagi Darestani, A. Chaudhari, and R. Heckel, Measuring robustness in deep learning based compressive sensing, ICML 2021 (long talk, top 3 % of submissions).

- P. L. Antkowiak, J. Lietard, M. Zalbagi Darestani, M. Somoza, W. J. Stark, R. Heckel, and R. N. Grass, Low cost DNA data storage using photolithographic synthesis and advanced information reconstruction and error correction, Nature Communications, 2020 (featured as Editor’s highlight).