Can you trust your machine learning model?

The Focus Group Data Mining and Analytics studies principles for robust and trustworthy machine learning. Specifically, we are interested in learning principles for non-independent data such as graphs and temporal data.

As the number of machine learning models deployed in the real world grows, questions regarding their robustness become increasingly important. Are the models’ predictions reliable, or do they change if the underlying data is slightly perturbed? For safety-critical and scientific use cases in particular, it is essential to assess the models’ vulnerability to worst-case perturbations, so that we can trust the machine learning model even in the worst case.

Focus Group Data Mining and Analytics

Prof. Stephan Günnemann (TUM), Rudolf Mößbauer Tenure Track Professor | Marin Bilos, Aleksandar Bojchevski, Bertrand Charpentier, Maria Kaiser, Johannes Klicpera, Anna Kopetzki, Aleksei Kuvshinov, Armin Moin, Oleksandr Shchur, Daniel Zügner (TUM), Doctoral Candidates | Host: Data Analytics and Machine Learning, TUM

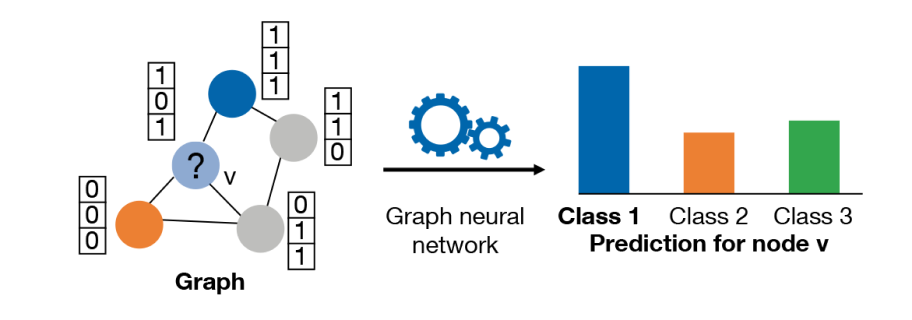

Robustness of graph neural networks

In this regard, our group focuses specifically on graph neural networks (GNNs). GNNs have emerged as the de facto standard for many learning tasks, significantly improving performance over the previous state of the art. They are used in high-impact applications across many domains, such as molecular property prediction [1], protein interface prediction, fraud detection, and breast cancer classification. However, as we have shown in our work [2], GNNs are highly non-robust with respect to deliberate perturbations of both the graph structure and the node attributes, making their outcomes highly unreliable. In a follow-up study, we generalized these results to unsupervised node embeddings [3].

Motivated by these insights, our group developed the first principles for provable robustness of GNNs: given a certain space of perturbations, the goal is to guarantee that no perturbation in that space changes the prediction. In that case, one can trust the prediction. As it turns out, providing such so-called “robustness certificates” is extremely challenging due to the discreteness of the graph structure, the non-linearity of the neural network, and the interactions taking place in the graph neural network. Despite these challenges, in our work [4] we provided the first robustness certificate for perturbations of the nodes’ attributes, while in [5] we proposed the first certificate for perturbations of the graph structure. Thus, for the first time, we can give guarantees about a GNN’s behavior. Both works also show how to improve the robustness of GNNs by adopting an enhanced training procedure. Overall, these works significantly extend the applicability of GNNs, paving the way for their use in scientific and critical application domains where reliable predictions are essential.
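To make concrete what a robustness certificate asserts, the following minimal sketch considers a much simpler setting than a GNN: a two-class linear classifier on binary attributes, where an adversary may flip at most a fixed number of attributes. In this toy case the worst-case margin can be computed exactly, so the certificate is simply "the worst-case margin stays positive". This is an illustration only and not the method of [4] or [5]; the function names, the weights, and the budget are hypothetical.

```python
def worst_case_margin(weights, bias, x, budget):
    """Smallest classification margin reachable by flipping at most
    `budget` entries of the binary attribute vector `x`.

    Toy setting (an assumption, not taken from the cited papers):
    the margin is linear, margin(x) = bias + sum_i weights[i] * x[i],
    and the current prediction is kept as long as the margin is positive.
    """
    margin = bias + sum(w * xi for w, xi in zip(weights, x))
    # Flipping attribute i (0 -> 1 or 1 -> 0) changes the margin by
    # weights[i] * (1 - 2 * x[i]).
    deltas = sorted(w * (1 - 2 * xi) for w, xi in zip(weights, x))
    # The adversary picks the most harmful flips and never uses a flip
    # that would increase the margin.
    harmful = [d for d in deltas[:budget] if d < 0]
    return margin + sum(harmful)


def is_certified(weights, bias, x, budget):
    """True if no admissible perturbation can change the prediction."""
    return worst_case_margin(weights, bias, x, budget) > 0


if __name__ == "__main__":
    weights = [2.0, -1.0, 0.5, -3.0]  # hypothetical model parameters
    bias = 1.0
    x = [1, 0, 1, 0]                  # hypothetical binary node attributes
    for budget in (0, 1, 2):
        print(budget, worst_case_margin(weights, bias, x, budget),
              is_certified(weights, bias, x, budget))
```

With these example values, the prediction is certified for a budget of one flip but not for two. For GNNs the worst case cannot be enumerated this directly, which is why the challenges named above (discreteness, non-linearity, interactions in the graph) matter.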

Uncertainty in ML models for temporal data

In a second line of research, we tackled the aspect of uncertainty. Quantifying uncertainty in neural network predictions is a key issue in making machine learning reliable. In many sensitive domains, including robotics, finance, and medicine, giving autonomy to AI systems depends heavily on the trust we can place in them. Besides being able to inform humans, AI systems have to be aware of their predictions’ uncertainty, allowing them to adapt to new situations and to refrain from making decisions in unknown or unsafe conditions.

In [6], we proposed the first method that incorporates uncertainty when predicting a sequence of discrete events that occur irregularly over time. This type of data is generated naturally in our everyday interactions with the environment. Examples include messages in social networks, medical histories of patients in healthcare, and integrated information from multiple sensors in complex systems such as cars. We present two new architectures that model the evolution of the distribution on the probability simplex. In both cases, we make it possible to express a rich temporal evolution of the distribution parameters, and we naturally capture uncertainty. In the experiments, our methods clearly outperform state-of-the-art models based on point processes for event and time prediction as well as for anomaly detection. Particularly noteworthy is that our methods provide uncertainty estimates for free.
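As a rough illustration of how a distribution on the probability simplex can carry uncertainty in addition to a prediction, the sketch below assumes a Dirichlet distribution over event-type probabilities. The Dirichlet is used here only as one familiar example of a distribution on the simplex; the text above does not specify the parameterization, and the function name and concentration values are hypothetical.

```python
def dirichlet_summary(alpha):
    """Mean and per-class variance of a Dirichlet(alpha) distribution.

    The mean is the predicted probability of each event type; the
    variance shrinks as the total concentration sum(alpha) grows, so it
    can serve as a simple indicator of how certain the prediction is.
    """
    alpha0 = sum(alpha)
    mean = [a / alpha0 for a in alpha]
    var = [a * (alpha0 - a) / (alpha0 ** 2 * (alpha0 + 1)) for a in alpha]
    return mean, var


if __name__ == "__main__":
    # Two hypothetical predictions with the same expected class
    # probabilities but different total concentration.
    confident = [90.0, 5.0, 5.0]
    uncertain = [9.0, 0.5, 0.5]
    for name, alpha in (("confident", confident), ("uncertain", uncertain)):
        mean, var = dirichlet_summary(alpha)
        print(name, mean, var)
```

Both predictions favor the first event type with the same expected probability, yet the second has a much larger variance. A point prediction alone could not distinguish the two cases.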

[1]

J. Klicpera, J. Groß and S. Günnemann, “Directional Message Passing for Molecular Graphs”, International Conference on Learning Representations (ICLR), 2020.

[2]

D. Zügner, A. Akbarnejad and S. Günnemann, “Adversarial Attacks on Neural Networks for Graph Data”, ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), 2018.

[3]

A. Bojchevski and S. Günnemann, “Adversarial Attacks on Node Embeddings via Graph Poisoning”, International Conference on Machine Learning (ICML), PMLR 97:695-704, 2019.

[4]

D. Zügner and S. Günnemann, “Certifiable Robustness and Robust Training for Graph Convolutional Networks”, ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), 2019.

[5]

A. Bojchevski and S. Günnemann, “Certifiable Robustness to Graph Perturbations”, Neural Information Processing Systems (NeurIPS), 2019.

[6]

M. Biloš, B. Charpentier and S. Günnemann, “Uncertainty on Asynchronous Time Event Prediction”, Neural Information Processing Systems (NeurIPS), 2019.